CAPTCHAs have long stood as the virtual gatekeepers of the internet. From verifying login attempts to preventing bots from spamming comment sections, these quirky puzzles have served as digital bouncers, asking users to “prove you’re not a robot.” But what happens when a robot is asked to take the test? That’s precisely what one curious user decided to find out.

Their idea was simple but compelling: what if ChatGPT—a powerful AI developed by OpenAI—was used as a CAPTCHA solver? The premise alone feels ironic. CAPTCHAs were designed to distinguish humans from machines, yet here was a machine being tested on its ability to pass as human. What unfolded was a fascinating exploration into the capabilities—and limitations—of AI when faced with challenges engineered specifically to keep it out.

The First Test: A Fake CAPTCHA

To begin the experiment, the user provided ChatGPT with a mock CAPTCHA that displayed the phrase “fake captcha.” No distortion, no tricks, no fancy graphics—just plain text.

ChatGPT breezed through the task. It immediately recognized the text and returned the correct answer. While it wasn’t a difficult challenge, it was a fitting way to ease the AI into the concept.

This type of CAPTCHA was once a staple of the early internet, when bots were primitive and text recognition was a significant obstacle. Today, however, such basic formats are virtually obsolete, rendered ineffective by the very tools they sought to guard against.

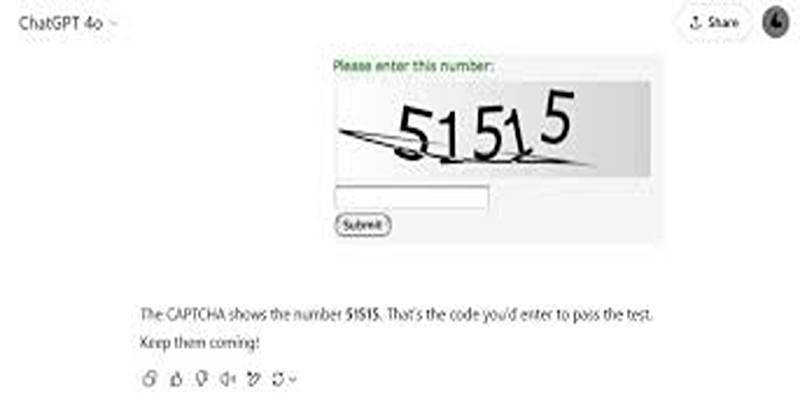

Numbers Don’t Lie—And Neither Does ChatGPT

Next came a classic numeral CAPTCHA: a sequence of distorted digits designed to confuse machines while remaining decipherable to humans.

ChatGPT had no trouble interpreting the numbers, even with lines and graphical noise layered across the image. Its performance was quick, accurate, and unfazed by visual interference.

It wasn’t unexpected. Modern AI models, especially those integrated with image processing capabilities, handle optical character recognition (OCR) with remarkable accuracy. These numeric CAPTCHAs, once a common sight on login pages and government websites, are gradually being retired—not because they’re too difficult, but because they’re too easy for AI to beat.

Image Grids and the Object Recognition Challenge

Then came the real test: image-based CAPTCHAs, particularly the kind that asks users to “select all squares containing bicycles” or similar objects.

The user presented ChatGPT with a 3x3 grid, following standard CAPTCHA format, and instructed it to number the squares from 1 to 9, top-left to bottom-right. In this specific case, the image included a fire hose, but the instructions requested bicycles—a red herring designed to trip up less sophisticated models.
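For readers who want to see the numbering convention spelled out, here is a minimal sketch of how squares 1 to 9 map onto rows and columns when counted from top-left to bottom-right. The function names `square_to_cell` and `cell_to_square` are made up for this illustration and are not part of the original experiment.

```python
from typing import Tuple


def square_to_cell(number: int, rows: int = 3, columns: int = 3) -> Tuple[int, int]:
    """Convert a 1-based square number into a 0-based (row, column) pair."""
    if not 1 <= number <= rows * columns:
        raise ValueError(f"square {number} is outside a {rows}x{columns} grid")
    # Counting runs left to right, then top to bottom.
    return divmod(number - 1, columns)


def cell_to_square(row: int, column: int, columns: int = 3) -> int:
    """Convert a 0-based (row, column) pair back into a 1-based square number."""
    return row * columns + column + 1


# Print the grid the way the user asked ChatGPT to number it.
for row in range(3):
    print(" ".join(str(cell_to_square(row, column)) for column in range(3)))
# 1 2 3
# 4 5 6
# 7 8 9
```

Agreeing on a fixed numbering like this lets a text-only answer such as "squares 2 and 6" be checked against the image without ambiguity.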

ChatGPT performed admirably. It correctly determined that no bicycles were present and recommended skipping the puzzle. This success demonstrated the AI’s growing proficiency with object detection, as well as its ability to follow nuanced instructions. But the real challenge was only beginning.

When AI Meets AI: Airplanes and Confusion

The next CAPTCHA was subtle and deceptive. The task: identify airplanes flying to the left. However, the images weren’t real—they were AI-generated, filled with visual quirks, odd textures, and inconsistent lighting. The airplanes were stylized to the point where even a human would hesitate.

ChatGPT stumbled. It selected a few correct squares but missed others and incorrectly selected images that didn’t qualify. This marked the first significant failure in the experiment.
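A mixed result like this (some hits, some misses, some wrong picks) can be made concrete with a small scoring sketch. The square numbers below are purely hypothetical, since the article does not record which squares were chosen, and `score_selection` is a name invented for this example.

```python
from typing import Dict, Set


def score_selection(selected: Set[int], correct: Set[int]) -> Dict[str, object]:
    """Compare the squares a solver picked against the answer key."""
    hits = selected & correct          # picked and right
    wrong_picks = selected - correct   # picked but should not have been
    missed = correct - selected        # should have been picked but were not
    return {
        "hits": hits,
        "wrong_picks": wrong_picks,
        "missed": missed,
        # Share of picks that were right, and share of targets that were found.
        "precision": len(hits) / len(selected) if selected else 0.0,
        "recall": len(hits) / len(correct) if correct else 0.0,
        # A grid CAPTCHA typically only passes on an exact match.
        "solved": selected == correct,
    }


# Hypothetical answer key and hypothetical solver output.
correct_squares = {1, 4, 7, 8}
selected_squares = {1, 4, 5}

result = score_selection(selected_squares, correct_squares)
print("hits:", sorted(result["hits"]))
print("wrong picks:", sorted(result["wrong_picks"]))
print("missed:", sorted(result["missed"]))
print("solved:", result["solved"])
```

Note that an empty selection against an empty answer key counts as solved here, which matches the earlier bicycle grid where skipping was the right move.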

It became clear that while AI can handle conventional photos and real-world imagery well, it struggles when confronted with artificially generated, ambiguous visuals—especially when the criteria involve abstract or directional interpretation.

Spotting the Penguin: A Return to Simplicity

The next challenge was refreshingly simple. The CAPTCHA displayed six squares, one of which contained a penguin. The catch? All images shared similar grayscale and icy tones, making visual discrimination more difficult.

Nonetheless, ChatGPT identified the penguin correctly and did so with apparent ease. It even remarked on the simplicity of the task. It was a reminder that when the visual data is grounded in real-world references and patterns, AI can hold its own—or even outperform a distracted human.

Flowers, Rhinos, and Confusion

Returning to a more abstract CAPTCHA format, the AI was tested on an image that featured a pink flower as a reference, with a 3x3 grid of various distorted or unrelated objects—rhinos, old cars, speakers, and a few flowers.

The goal was to pick the squares that matched the theme of the sample flower. ChatGPT partly succeeded. It correctly identified one flower but mistakenly chose a car in another square.

Did color similarity fool it? Perhaps texture? The logic was there, but the execution fell short. It became evident that visual context and object-specific accuracy remain sticking points, especially when images blend or share overlapping features.

The Open Circle Debacle

Finally, the user presented ChatGPT with the most abstract CAPTCHA yet: a collection of lines, arcs, letters, and circles. The instruction? Find the open circle.

This test wasn’t about objects or words. It was purely geometric.

ChatGPT approached the problem methodically. It imported image analysis libraries, detected contours, and filtered out characters. The AI ran through several code-based evaluations in search of a circle that wasn’t intersected or was “open.”
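To make the idea of an "open" shape concrete, here is a small sketch of one way to test for it. This is not the code ChatGPT ran: it swaps contour detection for a plain flood fill on a tiny hand-drawn grid, and it assumes that "open" means the outline has a gap that lets the inside connect to the outside. The grids and function names are invented for this illustration.

```python
from collections import deque
from typing import List

# '#' is ink, '.' is background. Both shapes are hypothetical test drawings.
CLOSED_RING = [
    ".......",
    "..###..",
    ".#...#.",
    ".#...#.",
    ".#...#.",
    "..###..",
    ".......",
]
OPEN_RING = [
    ".......",
    "..###..",
    ".#...#.",
    ".#.....",  # gap on the right side
    ".#...#.",
    "..###..",
    ".......",
]


def enclosed_background_cells(grid: List[str]) -> int:
    """Count background cells that cannot be reached from the image border."""
    rows, cols = len(grid), len(grid[0])
    seen = set()
    queue = deque()
    # Seed the flood fill with every background cell on the border.
    for r in range(rows):
        for c in range(cols):
            on_border = r in (0, rows - 1) or c in (0, cols - 1)
            if on_border and grid[r][c] == ".":
                seen.add((r, c))
                queue.append((r, c))
    # Spread through 4-connected background cells.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                if grid[nr][nc] == "." and (nr, nc) not in seen:
                    seen.add((nr, nc))
                    queue.append((nr, nc))
    total_background = sum(row.count(".") for row in grid)
    return total_background - len(seen)


def is_open_shape(grid: List[str]) -> bool:
    """A shape is treated as open when it traps no background inside it."""
    return enclosed_background_cells(grid) == 0


print(is_open_shape(CLOSED_RING))  # False
print(is_open_shape(OPEN_RING))    # True
```

This check only separates a sealed outline from everything else, so a stray line or letter would also count as "open." A real solver would first have to isolate which marks are circle candidates, which is where the geometric reasoning gets hard.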

And yet… it failed. Completely. Despite all the analysis, it selected the worst possible answer. It either misunderstood the concept of “open” or hallucinated additional shapes that didn’t exist.

It was the biggest disconnect between effort and outcome—where advanced methods produced an incorrect result, proving that sheer computational power doesn’t always translate into better decision-making.

Conclusion

This experiment turned out to be more than just a test of AI’s visual capabilities. It was a reflection of how blurred the line between humans and machines has become. AI can read distorted text, spot penguins, and recognize when the requested object is not in the grid at all. But introduce AI-generated imagery, loose matching against a reference image, or abstract geometry, and the system falters. CAPTCHAs were never meant to hold forever. But as AI continues to evolve, so too must the methods designed to test it.